David Wortley, once the Director of the Serious Games Institute, has done a nice write-up of my early experiments on Data Visualisation in Second Life. You can read it here:

16 December 2021

11 November 2021

An AI Landscape — What Do You Mean by AI?

I've updated my AI Landscape piece as I find myself using it a lot and posted it to Medium at

https://davidjhburden.medium.com/an-ai-landscape-what-do-you-mean-by-ai-b338908cce99

I think I'll post all my long form pieces to Medium as it's quite a nice platform to write and read things in, and probably gets more reach than the blog! But I'll always post links here as things get published.

10 November 2021

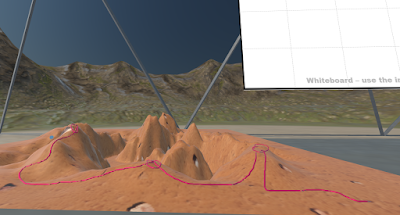

3D Immersive Visual Analytics White Paper - Just Updated

We last updated our 3D Immersive Visual Analytics White Paper in 2017! A lot has happened since then so it was well overdue an update - even though 80% of the paper covers the fundamentals, which a) haven't changed and b) many people still seem to be learning.

Although we stopped selling and using Datascape a couple of years ago, the use of VR for data visualisation is still something that is very close to my heart and that I'd like to do more work on. My first steps in using WebXR are a move in this direction, and the current Traveller Map WebXR demonstrator is actually a data-driven app which has a lot of the key features needed for a WebXR IVA system. Hopefully I'll get some development time over the next few months to pull a proper demo together.

In the meantime you can revisit some of our immersive 3D visualisations of the past at:

Read the updated 3D Immersive Visual Analytics White Paper now!

8 November 2021

David speaking at CogX on Virtual Humans and Digital Immortality

Somehow I forgot to blog this when I did the talk at CogX back in 2019, but it's still highly relevant and I've been linking to it from the updated website, so I thought I might as well post it up here as well.

1 November 2021

Jon Radoff's 7 Layer Metaverse Model and Market Map

Jon Radoff has an interesting "7 layer model of the Metaverse" at https://medium.com/building-the-metaverse/the-metaverse-value-chain-afcf9e09e3a7 and a mapping of the recent Facebook/Meta announcements at https://medium.com/building-the-metaverse/facebook-as-a-metaverse-company-d5712198b22d.

Needless to say we all have our own variations/comments on this. For me I think that Infrastructure/Decentralisation/Spatial should be the bottom 3 layers, and really the Human Interface should be the outer one - you don't (or shouldn't) build your virtual experience on the human interface, you should build the experience and then open up to as many interface types as you can. Discovery could perhaps be split between internal discovery (how you find out about stuff in the world) and external discovery (how you find out about experiences, and particularly how one experience links to another). And should the Creator economy sit on top of the experience? In a true Virtual World people are using the experience to create the economy, and then enhance the experience (so circular!). Perhaps something more like a network model would be more realistic (if less eye-catching) given the interconnectivities involved - it's not quite the ISO 7 Layer model diagram.

I'll have to dig out one of my old models.

Jon also has a Market Map of the Metaverse at https://medium.com/building-the-metaverse/market-map-of-the-metaverse-8ae0cde89696 - which is likely to be quite dynamic!

Jon also has a Metaverse Canon Reading Guide at https://medium.com/building-the-metaverse/the-metaverse-canon-reading-guide-9eb1b371b505

29 October 2021

Facebook (aka Meta) Connect2021

Me at Connect21 in Venues*

*although you could see the video in-world it doesn't show on the photos you take

I attended the Facebook Connect2021 event in Oculus (now Horizons) Venues yesterday afternoon. It struck me that there were a lot more people in VR than last time: around 1200 were meant to be there, although we were all sharded into ~20-person pods (and they were on 2 levels so you really only saw ~10).

Needless to say the whole name change thing and the relentless focus on the Metaverse made it a bit more weighty than the last one I went to which just introduced the Quest 2, but apart from Mark Zuckerberg finally "getting" virtual worlds there was really little there that wasn't being talked about 10 years ago. Sure the tech is moving on but there are still lots of areas which need to be sorted.

So here are my highlights/comments on the talk, in roughly timeline order.

- Yep, pretty much everything talked about in terms of what you could do in the VW element of the Metaverse you could do in SL 15 years ago

- The one big difference, and which would be cool, is the better integration of your desktop into VR and bringing your phone into VR so that you can stay in world whilst checking "RL" information or having chats with people in "RL"

- Zuckerberg talked about the metaverse as being the "replacement" for the mobile internet, but I think despite the fancy nature of the "metaverse", even when implemented through MR glasses we'll still find the unobtrusive nature of the smartphone (along with battery life etc) to be valuable, so I think they'll just be parallel streams into the meta-metaverse!

- He talked about interoperability in terms of being able to bring avatars and virtual objects between worlds - but it wasn't clear if this was between FB/Meta ecosphere applications only, or out to third party virtual worlds. I fear the former. At the ReLive conference in 2011 we highlighted interoperability as the key medium term need - and we're still not there!

- Some nice quick videos of the various Horizons platforms, with Rooms going from single to multi-user, and it looks like Spaces will have a Scratch type scripting system, which will be great news as long as it's powerful enough for decent work.

- Also some nice hints about better tools to enable 2D progressive web applications to display within the 3D/VR environment, further breaking the barrier between the 3D/VR and 2D/RL environments!

- The whole presentation kept switching from RL to greenscreen to Horizons to smoke and mirrors; by the end I wasn't sure if Mark was really an avatar or not, and you certainly couldn't tell what was real tech and what was marketingware.

- There seemed to be a lot of emphasis on "holograms" and going to real concerts/meetings as holograms with real friends/colleagues. It wasn't at all clear how that was ever going to work, although the AR glasses would sort of make it feasible. Mind you, having tried to stream a bit of video out of a concert recently, I can't see how the bandwidth will ever be there! Oh, and virtual after parties - tick, done that, a great one after The Wall show in SL "back in the day", actually on the stage set.

- The lack of a VR keyboard in Venues itself (and most VR apps) was keenly felt as it meant that the dozen or so of us there couldn't really interact whilst the session was going on. Meta may have a solution - see below.

- A big announcement for the short term was that Oculus products will not now mandate FB accounts, so the concerns that many corporate/academic clients had might go away. They will also continue to allow sideloading onto Quest, so it looks like the Quest will remain a more open platform and not be locked down to FB/Meta - hurrah!

- Lots of stuff on their "presence" SDKs to help developers better integrate controllers, MR/pass-through features and voice control. "Presence" was emphasised a lot, more than immersion (just like we've been telling a recent client!)

- Project Cambria will be their next generation high-end headset, non-tethered but more expensive than the Quest, although they hope features will gradually drop down to the Quests. Hi-rez, colour pass-through to better enable MR applications seems to be the key difference, plus the sensors to detect facial expression and eye-movement for more natural avatar interaction, nice.

- Project Nazare is their AR/MR glasses project, heading for full HoloLens-type MR but in a spectacles form-factor. This is what would make their "holograms" believable - as long as you put glasses on all the RL people.

- A list of future "breakthrough" areas for VW tech looked very generic; everything talked about was evolutionary, and interestingly neuro-interface tech was missing.

- Then he talked about neurotech (!), or specifically electromyography (EMG) and a device which looked suspiciously like the old Thalmic Labs MYO. Still have mine (see photo below). This senses what your fingers are doing from the electro-neural impulses picked up at the wrist. Some nice demos (?) of subtle gesture control, including typing. In theory you hardly need to move your fingers at all. One of the more interesting things shown. Does it work for feet and locomotion too?

- The Codec photo-realistic avatars looked quite good - volumetric and based on face/body scans, but with the ability to then change hair, clothes etc. The clothing demo and talk about hair modelling reminded me of how much we wrote about those areas in our Virtual Humans book.

My old MYO

Needless to say all the bad press side of Facebook, privacy and corporate greed didn't get a look in (although there was a snide comment about high taxes (!) stifling growth and innovation), but he did use the words "humble" or "humbling" more times than I could count, and Nick Clegg popped up for about 30 seconds to say not very much. As some of our recent work for a client has highlighted, VR offers unparalleled insights into personal behaviour, and it's vital that our explicit and implicit data is secure and not being exploited, and that the "metaverse" is not under the control of one company. If Facebook was really serious about the metaverse they'd open source the whole lot right now.

You can watch the presentation for yourself at https://www.youtube.com/watch?v=Uvufun6xer8&ab_channel=Meta

Full set of Connect21 presentations on the more technical side at https://www.facebook.com/watch/113556640449808/901458330492319/

Update: Looking at the World Building in Horizons video it really is very low rez at the moment, possibly even less than Hubs, and talk of strict performance limits. So good for some stuff but a whole load of use cases wiped right out (for now) :-(

27 October 2021

WebXR Wars

Interesting piece in Immersive Web Weekly on the "rift" between the Oculus/Chrome implementation of WebXR and the Firefox/HTC/others version.

Today XR headset makers face a tough choice among immersive browsers: invest a considerable number of people to maintain a custom Google Chromium-based browser with great WebXR support (as Facebook does for the Quest) or port a Mozilla Gecko-based browser like Firefox Reality and deal with XR support that hasn't changed in any substantial way since Mozilla's Mixed Reality team was laid off more than a year ago.

Last week Shen Ye followed the path of the Pico team in revealing that HTC's new Vive Flow headset will ship with Firefox Reality and Stan Larroque also announced in a YouTube livestream that the Lynx team is working on a Firefox Reality port for their headset.

HTC, Lynx, Pico and Mozilla do not intend, as far as I can tell, to update Gecko's WebXR implementation. The divergence between Chromium- and Gecko-based browsers has already fragmented the fetal immersive web, forcing developers to choose between supporting only one browser engine or writing what is effectively two separate rendering and input handling paths for their code. If we want a healthy and open immersive web then this must change.

(editor's note for transparency: I was the original product manager for Firefox Reality but left Mozilla before the layoffs. I have donated to the Mozilla Foundation for more than a decade and continue to do so but have no equity or other financial ties.)

We've bumped into this at Daden when looking at using Pico for a potential project. Pretty typical of the industry, agree a new standard and then immediately split it in two!

8 July 2021

RAF's Stories from the Future - Complete with Virtual Personas

The Royal Air Force has just released a second edition of their "Stories from the Future" - fiction pieces designed to get people thinking about the future of the Services and Air Defence.

One of the stories, "Heads Together" draws on some of the work we've done for MOD around the concept of virtual personas:

"Diverse viewpoints make for better decisions, so imagine a world where the whole of society engages with Defence through some form of service and the friends that you make there can convene virtually when you need to discuss a problem, whether they now work in Defence, in industry, in academia – or at all. In this tale, we look at how our people might benefit from this in the future"

The question that the article explicitly poses at the end is:

How would you feel about being perpetuated in virtual form after you had changed jobs, left Defence or even died? Would advice from your virtual self be a liability to your real self?

You can read this, and the other stories at https://www.raf.mod.uk/documents/pdf/stories-from-the-future-second-edition/

22 April 2021

Daden Newsletter April 2021

Our April Newsletter is out. In this issue:

- A Virtual Experience Design Space (VEDS) - VEDS is a new publicly and freely available space we've created in Mozilla Hubs for you and us to use to help think through and collaborate on the design decisions associated with a 3D virtual experience - whilst being inside a 3D/VR virtual world!

- Wonderland - Having looked at the range of SocialVR spaces out there we're now looking at WebXR authoring tools - this issue we take Wonderland out for a test-drive.

- Daden Hub - Daden Hub is effectively our virtual office in MozillaHubs - somewhere for us to meet clients and show them what Hubs can do - and feel free to check it out yourself. We've also linked it to (and from) a virtual onboarding experience we've built for Hubs to act as a walk-through tutorial on how to use Hubs.

- Plus some interesting papers that caught our eye on AI, chatbots and knowledge graphs (and which are coming from a similar direction to our own R&D); and links to three interviews/panel sessions that David has given over the last few months on chatbots and Virtual Humans.

We hope you enjoy the newsletter, and do get in touch if you would like to discuss any of the topics raised in the newsletter, or our products and services, in more detail!

You can read the Newsletter here.

22 March 2021

360 Photos in Mozilla Hubs

Been experimenting a bit with 360 photos in Mozilla Hubs. They are just part of an empty room, so you can add 3D objects "inside" the photo, although without any scripting you can't create hot-spots as such. You could though add links from one to another - but on its own that's not a smooth transition.

What does work well though is the ability for the room owner to load scenes around the participants, so you can do an immersive slideshow, everyone starting in one place, and then load a 360 around them, and then another one and so on. If you added a map or 3D table model like the one below you could end up doing quite a nice human-guided field trip/battlefield tour/holiday slideshow.

The photosphere shots here are ones I took on my iPhone at the base of Uluru, and apart from a few glitches at the joins they come out pretty well in Hubs.

Of course the nice thing about using Hubs for something like this is that it's very social and involving - everyone sees the same image at the same time, can see and talk to others, and naturally tends to move around the space towards the bits of the photo they are interested in, so larger groups naturally fragment into smaller groups if desired - which with spatial audio enables lots of parallel conversations to go on.

And here's more of a heritage/built environment shot from Hougoumont Farm on the battlefield of Waterloo.

Just get in touch if you've got a good idea of how you might be able to use this approach to 360 VR in your teaching/training/education/out-reach.

17 March 2021

IORMA Webinar: Chatbots, Virtual Humans and Consumers

Daden MD David Burden took part in the panel at last week's IORMA Consumer Commerce Centre webinar on Chatbots, Virtual Humans and Consumers. The webinar is available on YouTube at https://youtu.be/sPZQAvale1I:

As well as David there is some great expertise on creating lifelike avatars using some of the latest Hollywood derived technology, and also on the use of speech recognition and NLP to detect anxiety and truthfulness. It's a wide ranging and dynamic conversation and well worth an hour of your time!

15 March 2021

David Burden on the Dr Zero Show

Daden MD David Burden was interviewed about virtual worlds and virtual reality on the Dr Zero YouTube show hosted by Serdar Temiz over the weekend. David's piece starts at 34:42

25 February 2021

Introducing Daden Hub - Daden's new virtual home in Mozilla Hubs

This 3D environment lets you find out about Daden and what we do, play with some of the Mozilla Hubs tools, and follow teleports to some of our other builds in Mozilla Hubs.

We are increasingly using it instead of Zoom for some of our internal meetings, and don't be surprised if we invite you into it for external meetings too!

You can visit Daden Hub now in 2D or VR at https://hubs.mozilla.com/SJy5Hwn/daden-hub.

Here's a short video giving you a tour of the Hub.

Give us a shout if you'd like to meet up in the space and get a more personal demo!

19 February 2021

A Tale of Two Venues - AI/SF and Wrestling in VR!

I went to the Oculus Quest2 launch event in an earlier Beta, and it was OK but very basic, just move your avatar to a row of cinema seats and sit there. So what has changed?

You start with an avatar design session in your own room (not much choice of anything, but just enough to minimise identikit avatars), and then some very basic movement and interaction instruction. There is a very nice menu UI on your inside wrist which opens up to give you access to the main tools. You can navigate either by a local teleport method (move a circle to where you want to go) or free physical/joystick-based movement.

When you leave the room a new space loads and you enter the Venues atrium.

There's space for I think about 8 "suites", 4 down each side. I don't know how shared this is. My venue had the poster outside and declared 38 attendees.

Entering the suite another space loads. I say "suite" as there is a ground level space and a balcony space, both looking out to a big 2D screen where the Zoom relay was - 3 talking head webcams.

Now as an event experience it was pretty poor. The difference between the 3D/avatar "us" and the 2D/video "them" is big. I know you might pick up fewer nuances if they were avatars, but at least it would feel more like a seminar than a cinema. And if you were on Zoom you could ask questions by chat, but there was no chat facility in VR (and without a Bluetooth keyboard typing questions would be a pain). The lack of chat also meant that there was no side channel between the attending avatars - amplifying points, sharing links, getting to know each other. If someone tried to talk to you it just got in the way of the presentation - just as in the physical world. I know I keep saying it but c.2010 Second Life events felt far better.

Unfortunately the Venues screenshot camera doesn't show the video content!

The other big issue was my co-attendees. I know I was the only person on the ground level who was there from beginning to end. Maybe a handful of others were there for 20mins plus. I'd say peak occupancy was a dozen, more often half that. Most of the people though were there to play and have fun, several were kids (mics on the whole time, talking over the presenters etc), and were just having a laugh. Imagine trying to attend a seminar whilst a group of tweens charges through. Luckily at least one group decided to "take the party upstairs" and when I checked in at the end the balcony certainly seemed busier - but that was after the talk finished.

So not convinced.

On my way out I decided to check out the other suites. Only one was in use with 24hr Wrestling. Same layout. But a couple of big differences.

First, the video was 360 style, in fact I think it was even stereoscopic, so it really did feel like you were ringside watching the fight. It filled the whole of the space in front of you, and had a real sense of depth.

Second, as there was no commentary as such, it was just the fight, all the avatars chatting, shouting and fooling around with the cameras and confetti was all appropriate for the event - everyone was ringside!

The Wrestling crowd - not that you can tell with no video!

So I hadn't expected that a Wrestling event would beat an SF/Tech/Games event as a good demo of using VR for events - but it did. It just goes to show that you need to think about the complete experience when looking at how to use VR (and immersive 3D) for different kinds of events.

18 February 2021

NASA's Perseverance Rover in Mozilla Hubs

What better way to celebrate the (hopefully successful) landing of NASA's Perseverance rover on Mars than by looking at it in Mozilla Hubs!

NASA's wonderful model library at https://nasa3d.arc.nasa.gov/ not only has a nice model of Perseverance but it's also a) a reasonable size and b) in the .glb format preferred by WebXR apps like Hubs - this is great news if NASA is now standardising on this format. The model is about 4x the recommended Hubs size, so mobiles may have issues, but it loads in 20s or so.
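Since .glb is just the binary container for glTF 2.0, it's easy to check what you're about to drop into Hubs before uploading it. Here's a minimal Python sketch, assuming only the GLB layout from the Khronos glTF 2.0 spec: a 12-byte header (magic, version, total length) followed by length-prefixed chunks. The `inspect_glb` name is our own and hypothetical, and it only reports file size and chunk sizes; it doesn't count triangles or know what size Hubs recommends, so the comparison against that is up to you.

```python
import struct

GLB_MAGIC = 0x46546C67  # b"glTF" read as a little-endian uint32
CHUNK_NAMES = {
    0x4E4F534A: "JSON",  # b"JSON"
    0x004E4942: "BIN",   # b"BIN\0"
}


def inspect_glb(data: bytes) -> dict:
    """Parse the GLB header and list its chunks (hypothetical helper, not a Hubs API)."""
    if len(data) < 12:
        raise ValueError("too short to be a GLB file")
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file (bad magic)")
    chunks = []
    offset = 12
    end = min(length, len(data))  # don't read past a truncated file
    # Each chunk is an 8-byte header (payload length, chunk type) followed by the payload.
    while offset + 8 <= end:
        chunk_len, chunk_type = struct.unpack_from("<II", data, offset)
        chunks.append((CHUNK_NAMES.get(chunk_type, hex(chunk_type)), chunk_len))
        offset += 8 + chunk_len
    return {"version": version, "total_bytes": length, "chunks": chunks}
```

To use it, read the downloaded model as bytes, e.g. `inspect_glb(open("perseverance.glb", "rb").read())` (file name hypothetical), and compare `total_bytes` against whatever size budget you're working to.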

As this is a quick build we've just dropped the model into our existing "mars-like" terrain. We checked it for scale and it looks pretty much spot on. We've not added any interpretation boards - we may do that later.

If you're in VR then you can have great fun getting on your hands and knees to look under the rover - although we couldn't spot Ingenuity.

The model is at: https://hubs.mozilla.com/o3c26nJ/perseverance-mars-rover with a room code of 817861 if you're using a headset.

Remember that since this is WebXR you can just click immediately on the link above to go to Mars in your browser, no need to download or register, and it works with or without a VR headset.

Have fun, and do let us know how you get on in the comments and/or post images on Social Media and share with friends.

And don't forget to also check out our SpaceX Starship Hub room to look at the next generation of Mars spacecraft. That's at https://hubs.mozilla.com/SMUKcDy/starship-test.

Update: Here's a video of the rover from inside our VR headset:

16 February 2021

Virtual Humans interview on the 1202 Podcast

Daden MD David Burden is interviewed on the 1202 Human Factors podcast about our work on Virtual Humans. You can listen to it at:

https://podcasts.apple.com/gb/podcast/virtual-humans-should-we-be-concerned-an-interview/id1478605029?i=1000538768732

12 February 2021

SpaceX Starship in MozillaHubs

Having been quite pleased with the size of terrain mesh we could bring into Mozilla Hubs we decided to try a more substantial 3D model - in this case of Elon Musk's SpaceX Starship spacecraft. The model is by MartianDays on Sketchfab, and whilst not the highest rez (we were trying to avoid that!) it does look pretty good. For the record it's 20,600 triangles, which compares to 1.2 million (!) for a hi-rez model.

Again we're quite impressed at how well it's come in. Load times are pretty variable, from 30s to 1 minute, sometimes 2 minutes, but it displays very smoothly once you're in there. And with a VR headset on, looking all the way up to the top you get a real sense of the scale of the thing. We've lifted it off the ground so you can walk underneath to see the Raptor engine bells.

If you want to take a look - in a browser or in VR - go to:

https://hubs.mozilla.com/SMUKcDy/starship-test

The hub.link code is 416509 for easier access in VR.

10 February 2021

Introducing VEDS - A Mozilla Hubs based Virtual Experience Design Space

iLRN Attendees: The link to the session is: https://hubs.mozilla.com/PNaoEvs/daden-virtual-experience-design-space - this will launch you straight into Mozilla Hubs. If you're in VR the hub.link code is 563553.

If you haven't been in Mozilla Hubs before we also have a tutorial space at: https://hubs.mozilla.com/fFVfvrq/onboarding-tutorial

On the basis of practice-what-you-preach we've created a prototype Virtual Experience Design Space (VEDS) in Mozilla Hubs and opened it up to public use. The space takes a number of design tools that we've used for many years, and which we already have within our Daden Campus space in Trainingscapes but makes them available to anyone with a browser (including mobile) and any WebXR capable VR headset (such as Oculus Quest). Of course the nice thing about WebXR (and so Hubs) is that everything runs in the browser so there is no software to download - even on the VR headset.

Here's a video walkthrough of the VR experience.

Within the space we have four different 3D immersive experience design tools:

- A dichotomies space, where you can rank different features (such as linear/freeform or synchronous/asynchronous) against a variety of different design factors (such as cost, importance, time, risk).

- Sara de Freitas's 4D framework for vLearning design

- Bob Stone's 3 Fidelities model

- Bybee's 5E eLearning design model

8 February 2021

Social Virtual Worlds/Social VR

The last couple of years have seen a dramatic increase in the number of social virtual worlds around. We define such platforms as internet based 3D environments which are open to general use, are multi-user (so you can see and talk to other people) and within which users are able to create their own spaces. The worlds are typically accessible by both a flat screen (PC/Mac, and sometimes mobile/tablet) and through a VR headset.

At Daden we’ve been using these sorts of environments since the early 2000s. What is really beginning to change the game now is the emergence of WebXR social virtual worlds. These are completely web-delivered, so you only need your browser to access them (from PC, mobile and even VR headset), so there is no download, minimal differences between platforms and really easy access. They are emerging as a great way to get people off of Zoom and into somewhere more engaging for whatever social or collaborative task you need to do. The key examples at the moment are probably Mozilla Hubs and Framevr.io.

Below we highlight some of the key affordances, benefits and uses of these WebXR Social Virtual Worlds.

- Fully 3D, multi-user, avatar based, with full freedom of exploration

- In-world audio (often spatial) and text chat

- Runs without any download – and even on locked down desktops

- Graphics optimised for lower bandwidths and less powerful devices

- Out-the-box set of collaboration tools, eg*: screen-share, document-share, whiteboard, shared web browser (*depends on world)

- Free access model for low usage, many also open source

- Developer ability (us) to build custom environments

- Limited scripting ability at the moment

- Give meetings more of a sense of “space” than video calls

- Use environment and movement to help anchor the sessions and learning in memory

- Help train what you can’t usually teach in the classroom

- Excite and engage students and employees

3 February 2021

DadenU and SocialVR Try-Out: Spatial

As part of my DadenU day I thought I'd try out another SocialVR environment - this time Spatial (not to be confused with vSpatial which is a VR "virtual desktop" app). Spatial is downloaded as an app onto the Quest. The web client only works in "spectator" mode rather than giving you a full avatar presence.

A really nice touch is that your available "rooms" show as little mini-3D models to choose from (see above). However this doesn't look to be scalable as they are a fixed selection of shapes, and it actually only emphasises the pre-canned nature of the environment.

Another "USP" is that it takes a webcam image to generate your avatar. OK, I didn't try to optimise it and was probably looking up too much, but the result was very uncanny valley... And you can see from the arm position why many apps have gone for only head-body-hands, missing out the arms.

There are a number of fixed office/meeting room type environments to use, but no option I can see at the moment to make your own. There is a big display board, but it wasn't really clear how that worked. "Spectators" appeared in world as their webcam feed above the board. You could separately rez post-its, a shared web browser, images from your desktop etc. There's also a library of 3D models which I guess you can add to - but not too obvious how.

Navigation seemed very clunky with step wise rotation on the joystick - hard to manoeuvre in a space which albeit small is still larger than my room to freely wander in VR in.

And that's sort of it.

As a step towards a very business orientated VR space it's not bad, if they can improve the typical avatar capture to the quality that seems to be in the promotional shots that would be great, and a scalable version of the room models would be wonderful to see more widely. But why be a slave to the real-life model - their "Mars" planning room has a small model of a crater, and a small model of Curiosity - why not have both full scale (and the crater small scale) and have the meeting on Mars?

Here's my initial take on Spatial plotted on our radar diagram, and below some key thoughts on each measure.

- Accessibility/Usability - Headset download, reasonably intuitive but clunky movement

- Avatars - nice try at scanning but pretty uncanny valley

- Environment - nice looking but fixed and few

- Collab Functions - reasonable starter set but could improve

- Multi-Platform - really only VR for full functionality

- Object Rez - fixed library only?

- Open Source/Standards - doesn't seem it

- World Fidelity - none, non-persistent rooms

- Privacy/Security - room ownership/invites only

- Scripting - none

1 February 2021

Evaluating 3D Immersive Environment and VR Platforms

As part of our work on a forthcoming white paper looking at the ever-growing range of 3D immersive environment platforms available we thought it would be useful to identify some criteria/function sets to help make comparison easier. If you were to use these as part of a selection exercise then you'd probably want to weight them appropriately as different use-cases would need different features. We've also assumed that all offer a common feature set, including multi-user and text/voice communications, walk/run, screengrabs etc.

A lot of these categories are quite encompassing, and a further analysis may split them down into sub-categories - or create more higher level categories where a particular feature becomes important. We may well revise this list as we work through the platforms (at least 25 at the current count) but here's our starter for 10 (in alphabetical order).

A/accessibility (with a lowercase a) and Usability

Avatar Fidelity & Customisation

Environment Fidelity and Flexibility

Meeting and Collaboration Functions

Multi-Platform Support

Object Rez, Build and Import

Open Source/Standards Based/Portability

Persistency/Shared World/In-World Creativity (World Fidelity?)

Privacy and Security

Scripting and Bots

Cost
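If you do use the list for a selection exercise, the weighting we mention boils down to a weighted average over the criteria. Here's a minimal Python sketch, assuming you score each platform on each criterion with higher always meaning better (so a cheap platform scores high on Cost). The criterion labels are abbreviated from the list above; the scale, weights, function names and "Platform A/B" are all hypothetical, not our actual ratings.

```python
CRITERIA = [
    "Accessibility and Usability",
    "Avatar Fidelity & Customisation",
    "Environment Fidelity and Flexibility",
    "Meeting and Collaboration Functions",
    "Multi-Platform Support",
    "Object Rez, Build and Import",
    "Open Source/Standards Based/Portability",
    "Persistency/Shared World/In-World Creativity",
    "Privacy and Security",
    "Scripting and Bots",
    "Cost",
]


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted mean over the criteria given a positive weight for this use-case."""
    used = [c for c in CRITERIA if weights.get(c, 0) > 0]
    if not used:
        raise ValueError("give at least one criterion a positive weight")
    total_weight = sum(weights[c] for c in used)
    # A weighted criterion with no score raises KeyError rather than silently counting as 0.
    return sum(scores[c] * weights[c] for c in used) / total_weight


def rank_platforms(platforms: dict[str, dict[str, float]],
                   weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (platform, score) pairs, best first."""
    ranked = [(name, weighted_score(s, weights)) for name, s in platforms.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical use-case: scripting matters three times as much as cost.
weights = {"Scripting and Bots": 3, "Cost": 1}
platforms = {
    "Platform A": {"Scripting and Bots": 1, "Cost": 4},
    "Platform B": {"Scripting and Bots": 3, "Cost": 2},
}
print(rank_platforms(platforms, weights))  # [('Platform B', 2.75), ('Platform A', 1.75)]
```

Criteria left out of the weights simply drop out of the average, which is one way of expressing that different use-cases need different features.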

Radar Plot

Radar Plot - Mozilla Hubs

Radar Plot - Second Life

A Starter for 10

29 January 2021

Jan 2021 Newsletter Out

The January 2021 edition of our newsletter is out. In this issue:

- Using VR for nurse training - A case-study of our work at Bournemouth University in developing a 3D and VR simulation to help student nurses learn how to manage a diabetic patient on the ward and cope with a related emergency

- VR in the Training Mix - How immersive 3D and VR stack up against more traditional approaches when it comes to addressing the needs of the "3 fidelities" of skills training

- SocialVR Worlds - Our latest experiences inside the emerging Social Virtual Worlds such as AltSpaceVR and MozillaHubs - and what potential they might have for more serious use

- Plus snippets of other things we've been up to in the last 3 months - including links to our presentations at the Giant Health event, and a look at our ever growing library of health training related assets.

You can download and read it here.

25 January 2021

Case Study - Social Worker Home Visits at the OU

Home visits are a key part of a social worker's life, but are notoriously hard to train for. Whilst role-play can help, the fact that it is typically done in the classroom loses the immersion, and it's only available when the course sets it up. Creating a home visit in VR eliminates both these issues - offering full immersion and 24/7 learning.

Over the last year we've been helping researchers at the Open University build a home visit learning experience for their social work students, so that the students can work through a typical home visit (with supporting case notes and messages from colleagues) to try and work out what is going on.

The students get to talk to the mother, father and two of the children. Whilst we've used audio as well as text for the dialogues so that students can better judge emotional cues we've also implemented an "emotional barometer" alongside the chat to make plain text chat sections more able to convey the same sort of information.

You can read more about the project by downloading the case study.

18 January 2021

Case Study - Virtual Field Trips

Thought we'd refresh our case study of the Virtual Skiddaw project we did for the Open University a while back. The simulation modelled 100 sq km (!) of the UK Lake District, with 6 sites modelled at ~1cm accuracy with photogrammetry. Students can pick up hand samples, look at virtual microscope slides, fly (!) around the environment and even lift up a cross-section of the rock!

The case study is downloadable here.

There are also a couple of great videos - a narrated walkthrough by the OU's geology lead on the project.

And the OU's Geology and vLearning leads discussing the project.

The app is still in use with students at the OU.

11 January 2021

Immersive 3D Visual Analytics in WebXR

Whilst this application was originally built as a side project it shows some principles and ideas which are probably worth sharing here. The application uses WebXR to display data in VR. The data is fetched from a web service, and the user can choose what data parameters are used on two of the point features, in this case size and height. The key thing about WebXR is that it lets this visualisation run in a VR headset without any download or installs. Just point the in-built browser at the URL, click on Enter VR and the browser fades away and you are left in a 3D space with the data.
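The core of the "user chooses which data parameter drives size and height" idea is just rescaling two chosen fields into ranges that make sense in metres around you. Here's a minimal Python sketch of that mapping step. It isn't the app's actual code (which runs as browser-side JavaScript); it assumes a y-up scene as WebXR uses, and the field names (`x`, `y` for map position, plus whichever numeric fields the user picks), the `map_points`/`rescale` helpers and the output ranges are all hypothetical.

```python
def rescale(value: float, lo: float, hi: float, out_lo: float, out_hi: float) -> float:
    """Linearly map value from [lo, hi] to [out_lo, out_hi]; mid-range if all values are equal."""
    if hi == lo:
        return (out_lo + out_hi) / 2
    return out_lo + (value - lo) * (out_hi - out_lo) / (hi - lo)


def map_points(records: list[dict], size_field: str, height_field: str,
               size_range: tuple[float, float] = (0.05, 0.5),
               height_range: tuple[float, float] = (0.0, 2.0)) -> list[dict]:
    """Turn data records into point glyphs: map position on the floor, two chosen fields as size and height."""
    sizes = [r[size_field] for r in records]
    heights = [r[height_field] for r in records]
    s_lo, s_hi = min(sizes), max(sizes)
    h_lo, h_hi = min(heights), max(heights)
    points = []
    for r in records:
        points.append({
            "x": r["x"],  # map column -> floor x
            "z": r["y"],  # map row -> floor z (y is "up" in a WebXR scene)
            "y": rescale(r[height_field], h_lo, h_hi, *height_range),
            "size": rescale(r[size_field], s_lo, s_hi, *size_range),
        })
    return points
```

Because the ranges are recomputed from whichever fields are chosen, swapping the size or height parameter is just a second call to `map_points` with different field names, and the scene can be rebuilt from the result.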

The data itself is the star map used in the classic GDW Traveller game (think D&D in space) - but as we say the data isn't important, just the novel interaction.

To try out our Data Visualisation in WebXR just use the browser in your WebXR compatible VR headset to get to this page, and then click on the link below. Once the new page loads just click on the Enter VR button.

Note that whilst the page will open in an ordinary browser you won't be able to do much. But watch the video here to get an idea:

You can also read more about 3D Immersive Visual Analytics and the differences between allocentric and egocentric visualisation in our white paper.